This is a written version of my keynote at The Official 2017 European Selenium Conference in Berlin, Germany.

How do your colleagues contribute to test automation?

Who is involved in the design, development and maintenance of your test suites?

What would happen if people in your team changed how they participate in test automation?

How could you influence this change?

This article will encourage you to consider these four questions.

Introduction

When I was 13 years old I played field hockey. I have a lot of fond memories of my high school hockey team. It was a really fun team to be part of and I felt that I really belonged.

When I was 10 years old I really wanted to play hockey. I have clear memories of asking my parents about it, which is probably because the conversation happened more than once. I can remember how much I wanted it.

What happened in those three years, between being a 10 year old who wanted to play hockey and a 13 year old creating fond memories in a high school hockey team? Three things.

The first barrier to playing hockey was that I had none of the gear. I lived in a small town in New Zealand, both my parents were teachers, and hockey gear was a relatively large investment for my family. To participate in the sport I needed a stick, a mouth guard, shin guards, socks. Buying all this equipment gave me access to the sport.

Once I had the gear, I needed to learn how to use it. It turned out that my enthusiasm for getting onto the field did not translate into a natural ability. In fact, initially I was quite scared of participating. I had to learn to hit the ball and trap it, the different positions on the field, and what to do in a short corner. Learning these skills gave me the confidence to play the game, which meant that I started to enjoy it.

The third reason that I ended up in a hockey team when I was 13 years old was because that is where my friends were. As a teenager, spending time with my friends after school was excellent motivation to be part of a hockey team.

Access, skills, and motivation. These separated me at 10 years of age from me at 13 years of age. These separated a kid who really wanted to participate in a sport from someone who felt like they were part of a team.

This type of division is relatable. Team sports are an experience that many of us share, both the feelings of belonging and those of exclusion. Access, skills, and motivation also underpin other types of division in our lives.

Division in test automation

If you look at a software development team at a stand-up meeting, they are all standing together. People are physically close to each other, not on opposite sides of a chasm. But within that group are divisions, and different divisions depending on the lens that you use.

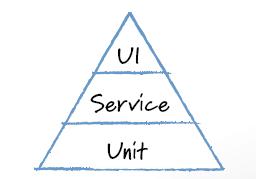

If we think about division in test automation for a software development team then, given what I’ve written about so far, you might imagine something like this:

Figure: A linear diagram of division

People are divided into categories and progress through these pens from left to right. To be successful in test automation I need access to the source code, I need the skills to be able to write code, and I need to be enthusiastic about it. Boom!

Except, perhaps it’s not that simple or linear.

What if I’m a new tester to a team, and I have the coding skills but not permissions to contribute to a repository? What if I’m enthusiastic, but have no idea what I’m doing? It’s not always one, then the other, then the other. I am not necessarily going to acquire each attribute in turn.

Instead, I think the division looks something like this.

Figure: A Venn diagram of division by access, skills and motivation

An individual could have any one of these attributes, any combination of them, or none at all.

To make sense of this, I’d like to talk in real-life examples from teams that I have been part of, which feature five main characters:

Figure: Five characters of an example team

The grey goose represents the manager. The burgundy red characters represent the business: the dog is the product owner, the horse is the business analyst. Orange chickens are the developers, and the yellow deer are the testers.

Figure: Team One

Team One

This was an agile development team in a large financial institution. I was one of two testers. We were the only two members of the team who were committing code to the test automation suite. We are the two yellow deer right in the middle of the Venn diagram with access, skills and motivation.

The developers in this team could have helped us because they had all the skills. They didn’t show any interest in helping us, but we also didn’t give them access to our code. The three orange chickens at the top of the Venn diagram show that the developers had skills, but no motivation or access.

The business analyst didn’t even know that we had test automation, and there was no product owner in this team. However, there was a software development manager who was a vocal advocate for the test automation to exist, though they didn’t understand it. The burgundy red horse at the top right is outside of the diagram, and the grey goose is in motivation alone.

The test automation that this farmyard created was low-level: it executed queries against a database. As we were testing well below the user interface where the business felt comfortable, they were happy to have little involvement. The code in the suite was okay. It wasn’t as good as it could have been if the developers were more involved, but it worked and the tests were stable.

Figure: Team Two

Team Two

This was a weird team for me. You can see that as a tester, the yellow deer, I had the skills and motivation to contribute to test automation, but no access. I was brought in as a consultant to help the existing team of developers and business analysts to create test automation.

The developers and business analysts had varying skills. There were a couple of developers who were the main contributors to the suites. The business analysts had access and were enthusiastic, but they didn’t know how to write or read code. Then there were a couple of developers who had the access and skills, but firmly believed that test automation was not their job. They’re the chickens on the left without motivation.

This team built a massive backlog of technical debt in their test automation because the developers who were the main contributors preferred to spend their time doing development. The test code was elegant, but the test coverage was sparse.

Figure: Team Three

Team Three

In this team everyone had access to the code except the project manager, but skills and motivation created division.

I ended up working on this test suite with one of the business analysts. He brought all the domain and business knowledge, helped to locate test data, and made sure that the suites had strong test coverage across all of the peculiar scenarios. I brought the coding skills to implement the test automation.

In this team I couldn’t get any of the developers interested in automation. Half had the skills, half didn’t, but none of them really wanted to dive in. The product owner and the other BA who had access to the code were not interested either. They would say that they trusted what the two of us in the middle were producing, so they felt that they didn’t need to be involved.

I believe that the automation we created was pretty good. We might have improved with the opportunity to do peer review within a discipline. The business analyst reviewed my work, and I reviewed his, but we didn’t have deep cross-domain understanding.

Figure: Team Four

Team Four

This was a small team where we had no test automation. We had some unit tests, but there wasn’t anything beyond that. This meant that we did a lot of repetitive testing that, in retrospect, seems a little silly.

I was working with two developers. We had a business analyst and a product owner, but no other management alongside us. The technical side of the delivery team all had access to the code base and the skills to write test automation, but we didn’t have time or motivation to do so. The business weren’t pushing for it as an option.

You may have heard similarities to your situation in these experiences. Take a moment to consider your current team. Where would you put your colleagues in a test automation farmyard?

Contributors to test automation

Next, think about how people participate in test automation depending on where they fall in this model. Originally I labelled the parts of the diagram as access, skills, and motivation.

If I switch these labels to roles they might become:

A person who only has access to test automation is an observer. They're probably a passive observer, as they don't have the skills or the motivation to be more involved.

A person who only has skills is a teacher. They don't have access or motivation, but they can contribute their knowledge.

A person who only has motivation is an advocate. They're a source of energy for everyone within the team.

Where these boundaries overlap:

A problem solver is someone with access and skills who is not motivated to get involved with test automation day-to-day. These people are great for helping to debug specific issues, reviewing pull requests, or asking specific questions about test coverage. Developers often sit in this role.

Coaches have skills and motivation, but no access. They’re an outside influence to offer positive and hopefully useful guidance. If you consider a wider set of colleagues, you might treat a tester from another development team as a coach.

Inventors are those who have access and motivation, but no skills. These are the people who can see what is happening and get super excited about it, but don’t have skills to directly participate. In my experience they’ll throw out ideas, some are crazy and some are genius. These people can be a source of innovation.

And in the middle are the committers. These are generally the people who keep the test suite going. They have access, skills, and motivation.
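As a minimal sketch, the role labels above can be written as a lookup from the three attributes to a role name. The names `Attributes`, `ROLES`, and `role_for` are hypothetical and exist only for this illustration. The sketch also assumes each attribute is a simple yes or no, which is a simplification of how people really sit within the model.

```python
from typing import NamedTuple


class Attributes(NamedTuple):
    """Whether a person has each of the three attributes (simplified to yes/no)."""
    access: bool
    skills: bool
    motivation: bool


# Role names are taken from the article; the table structure is illustrative.
ROLES = {
    Attributes(access=True, skills=False, motivation=False): "observer",
    Attributes(access=False, skills=True, motivation=False): "teacher",
    Attributes(access=False, skills=False, motivation=True): "advocate",
    Attributes(access=True, skills=True, motivation=False): "problem solver",
    Attributes(access=False, skills=True, motivation=True): "coach",
    Attributes(access=True, skills=False, motivation=True): "inventor",
    Attributes(access=True, skills=True, motivation=True): "committer",
}


def role_for(access: bool, skills: bool, motivation: bool) -> str:
    """Return the role for a combination of attributes.

    A person with none of the three attributes sits outside the diagram.
    """
    return ROLES.get(Attributes(access, skills, motivation), "outside the diagram")


if __name__ == "__main__":
    # Team One, as described earlier in the article.
    team_one = {
        "tester": (True, True, True),
        "developer": (False, True, False),
        "manager": (False, False, True),
        "business analyst": (False, False, False),
    }
    for person, attributes in team_one.items():
        print(f"{person}: {role_for(*attributes)}")
```

Running this for Team One labels the testers as committers, the developers as teachers, the manager as an advocate, and places the business analyst outside the diagram, which matches the placement of the animals in that team's Venn diagram.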

Figure: How people contribute to test automation

Changing roles

Now that you have labels for the way that your colleagues contribute to your test automation, consider whether people are in the "right" place.

I’m not advocating for everyone in your team to be in the middle of the model. Being a committer is not necessarily a goal state; there is value in having people in different roles. However, there might be specific people who you can shift within the model that would create a big impact for your team.

Consider how people were contributing to test automation in the four teams that I shared earlier.

Figure: Team One

In team one, all the developers were teachers. They had skills, but nothing else. In retrospect if I was choosing one thing to change here, we should have given at least one of the developers access to the code so that they could step into a problem solving role and provide more hands-on help.

Figure: Team Two

In team two, I found it frustrating to coach the team without being able to directly influence the code. In retrospect, I could have fought harder for access to the code base. I think that as a committer I would have had greater impact on the prioritisation of testing work and the depth of test coverage provided by automation.

Figure: Team Three

In team three, it would have been good to have a peer reviewer from the same discipline. Bringing in a developer to look at the implementation of tests, and/or another business analyst to look at business coverage of the tests, could have made our test automation more robust.

Figure: Team Four

In team four, we needed someone to advocate for automation. Without a manager, I think the product owner was the logical choice for this. If they’d been excited about test automation and created an environment where it had priority, I think it would have influenced the technical team members towards automation.

Think about your own team. Who would you like to move within this model? Why? What impact do you think that would have?

Scope of change

To influence, you first need to think about what specifically you are trying to change. Let's step back out to the underlying model of skills, access, motivation. These three attributes are not binary themselves. If you are trying to influence change for a person in one of these dimensions, then you need to understand what exactly you are targeting, and why.

Access

What does it mean to have access to code? Am I granted read-only permissions to a repository, or can I edit existing files, or even create new ones? Does access include having licenses to tools, along with permission to install and set up a development environment locally?

In some cases, perhaps access just means being able to see a report of test results in a continuous integration server like Jenkins. That level of access may be enough to involve a business analyst or a product owner in the scope of automated test coverage.

When considering access, ask:

- What are your observers able to see?

- What types of problems can your problem solvers respond to?

- How does access limit the ideas of your inventors?

Skill

Skill is not just coding skill.

Ash Winter has developed a wheel of testing which I think is a useful prompt for thinking more broadly about skill:

Figure: Wheel of Testing by Ash Winter

Coding is one skill that helps someone contribute to test automation. Skill also includes test design, the ability to retrieve different types of test data, creating a strategy for test automation, or even generating readable test reports.

How do the skills of your teachers, coaches, and problem solvers differ? Where do you have expertise, and where is it lacking? What training should your team seek?

Motivation

Motivation is not simply "I want test automation" or "I don’t want test automation". There's a spectrum - how much does a person want it? You might have a manager who advocates for 100% automated test coverage, or a developer who considers anything more than a single UI test to be a waste of time.

How invested are your advocates? Should they be pushing for more or backing off a little?

How engaged are your coaches and inventors?

Wider Perspective

The other thing to consider is who isn’t inside the fences at all. The examples that I shared above featured geese, dogs, horses, chickens, deer. Who is not in this list? Are there other animals around your organisation who should be part of your test automation farmyard?

Test automation may be helpful for your operations team to understand the behaviour of a product prior to its release. If you develop executable specifications using BDD, or something similar, could they be shared as a user manual for call centre and support staff?

A wider perspective can also provide opportunities for new information to influence the design of your test automation. Operations and support staff may think of test scenarios that the development team did not consider.

Conclusion

Considering division helps us to feel empathy for others and to more consciously split ourselves in a way that is "right" for our team. Ask whether there are any problems with the test automation in your team. If there are no problems, do you see any opportunities?

Next, think about what you can do to change the situation. Raise awareness of the people around you who don't have access that would be useful to them. Support someone who is asking for training or time to contribute to automation. Ask or persuade a colleague to move themselves within the model.

Testers have a key skill required to be an agent of change: we ask questions daily.

How do your colleagues contribute to test automation?

Who is involved in the design, development and maintenance of your test suites?

What would happen if people in your team changed how they participate in test automation?

How could you influence this change?