The Experiment Framework

After researching pair testing, I decided to create a structured framework for experimenting with pairing. I felt there was a need to set clear expectations in order for my 20+ testers to have a consistent and valuable pairing experience. This did feel a little dictatorial, so I made a point of emphasizing the individual responsibility of each tester to arrange their own sessions and control what happened within them. There has been no policing or enforcement of the framework, though most people appear to have embraced the opportunity to learn beyond the boundaries of their own agile team.

I decided that our experiment will run for three one-month iterations. Within each month, each pair will work together for one hour per week, alternating each week between the project teams of the two people in the pair. As an example, imagine Sandi in Project A is paired with Danny in Project B. In the first week of the iteration they will pair test Project A at Sandi's desk, then in the second week they will pair test Project B at Danny's desk, and so on. At the end of the monthly iteration each pair should have completed four sessions, two in each project environment.
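For anyone who wants to generate the rota rather than work it out by hand, the alternation described above can be sketched in a few lines of Python. This is only an illustration, not a tool we actually used: the `Tester` class, the `iteration_schedule` function, and the assumption that a one-month iteration means exactly four weekly sessions are all mine.

```python
from dataclasses import dataclass

# Assumption: a one-month iteration is treated as four weekly sessions.
WEEKS_PER_ITERATION = 4


@dataclass(frozen=True)
class Tester:
    """Hypothetical record for one tester and their project team."""
    name: str
    project: str


def iteration_schedule(first, second, weeks=WEEKS_PER_ITERATION):
    """Return (week, host, visitor) tuples, alternating the host each week."""
    schedule = []
    for week in range(1, weeks + 1):
        # Odd weeks are hosted by the first tester, even weeks by the second.
        host, visitor = (first, second) if week % 2 == 1 else (second, first)
        schedule.append((week, host, visitor))
    return schedule


sandi = Tester("Sandi", "Project A")
danny = Tester("Danny", "Project B")

for week, host, visitor in iteration_schedule(sandi, danny):
    print(f"Week {week}: pair test {host.project} at {host.name}'s desk with {visitor.name}")
```

Each tester hosts two of the four sessions, which matches the "two in each project environment" expectation.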

In between iterations, the team will offer their feedback on the experiment itself and the pairing sessions that they have completed. As we are yet to complete a full iteration, I'm looking forward to receiving this first round of feedback shortly. I intend to adapt the parameters of the experiment before switching the assigned pairs and starting the second iteration.

At the end of the three months I hope that each person will have a rounded opinion about the value of pairing in our organisation and how we might continue to apply some form of pairing for knowledge sharing in future. At the end of the experiment, we're going to have an in-depth retrospective to determine what we, as a team, want to do next.

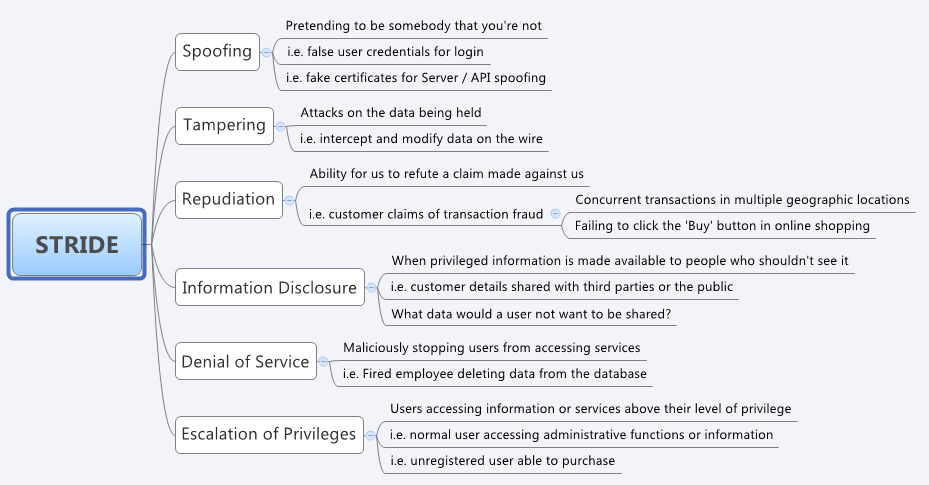

An example of how one tester might experience the pairing experiment

A Sample Session

In our pair testing experiment, both participants are testers. To avoid confusion when describing a session, we refer to the testers involved as a native and a visitor. The native hosts the session at their workstation, selects a single testing task for the session, and holds accountability for the work being completed. The native may do some preparation, but pairing will be more successful if there is flexibility. A simple checklist or set of test ideas is likely to be a good starting point.

The visitor joins the native to learn as much as possible, while contributing their own ideas and perspective to the task.

During a pairing session there is an expectation that the testers should talk at least as much as they test so that there is shared understanding of what they're doing and, more importantly, why they are doing it.

When we pair, a one-hour session may be broken into the following broad sections:

10 minutes – Discuss the context, the story and the task for the session.

The native will introduce the visitor to the task and share any test ideas or high-level planning they have prepared. The visitor will ask a lot of questions to be sure that they understand what the task is and how they will test it.

20 minutes – Native testing, visitor suggesting ideas, asking questions and taking notes.

The native will be more familiar with the application and will start the testing session at the keyboard. The native should talk about what they are doing as they test. The visitor will make sure that they understand every action taken, ask as many questions as they have, and note down anything of interest in what the native does including heuristics and bugs.

20 minutes – Visitor testing, native providing support, asking questions and taking notes.

The visitor will take the keyboard and continue testing. The visitor should also talk about what they are doing as they test. The native will stay nearby to verbally assist the visitor if they get confused or lost. Progress may be slower, but the visitor will retain control of the workstation through this period for hands-on learning.

10 minutes – Debrief to collate bug reports, reflect on heuristics, update documentation.

After testing is complete it’s time to share notes. Be sure that both testers understand and agree on any issues discovered. Collate the bugs found by the native with those found by the visitor and document them according to the traditions of the native team (post-it, Rally, etc.). Agree on what test documentation to update and what should be captured in it. Discuss the heuristics listed by each tester, and add any that were missed to the list.

After the session the visitor will return to their workstation and the pair can update documentation and the wiki independently.
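If it helps to see the hour laid out against the clock, the outline above can be turned into a simple running agenda. This is a rough sketch only: the `SESSION_PHASES` list and the `agenda` function are hypothetical names, and the activity labels are shortened from the section descriptions.

```python
# (minutes, activity) pairs taken from the sample session outline.
SESSION_PHASES = [
    (10, "Discuss the context, the story and the task"),
    (20, "Native testing, visitor asking questions and taking notes"),
    (20, "Visitor testing, native providing support"),
    (10, "Debrief: collate bugs, reflect on heuristics, update documentation"),
]


def agenda(phases):
    """Return (start_minute, end_minute, activity) for each phase in order."""
    timeline = []
    start = 0
    for minutes, activity in phases:
        timeline.append((start, start + minutes, activity))
        start += minutes
    return timeline


for start, end, activity in agenda(SESSION_PHASES):
    print(f"{start:02d}-{end:02d} min: {activity}")
```

The four sections add up to the full 60 minutes, with each tester spending the same 20 minutes at the keyboard.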

To support this sample structure and emphasise the importance of communication, every tester was also given the following graphic, which includes potential questions to ask in each phase:

Questions to ask when pair testing

I can see possibilities for this experiment to work for other disciplines - developers, business analysts, etc. I'm looking forward to seeing how the pairing experiment evolves over the coming months as it molds to better fit the needs of our team.