|

| "I think we have an issue" -- Delivering unwelcome messages Fiona Charles |

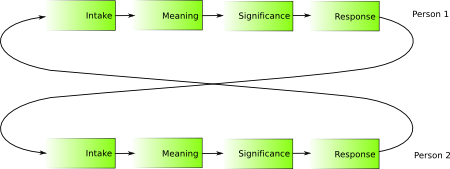

The Satir Interaction Model describes what happens inside us as we communicate: the process we go through as we take in information, interpret it, and decide how to respond [2]. In the model above, there are four fundamental steps between stimulus and reply: intake, meaning, significance, then response [3].

These four steps are Gerald Weinberg's simplification of the original work of Virginia Satir. Weinberg has written about the Satir Interaction Model in the context of technical leadership, while Satir wrote for an audience of family therapists [4]. Compared side by side, the original work includes some additional steps:

Figure: Satir Interaction Model, Steven M. Smith [4]

Resolving communication problems

The Satir Interaction Model can be used to dissect communication problems. It can help us identify what went wrong in an interaction, and it provides an approach for resolving the issue immediately [5]. Many communication problems occur when someone receives a response that falls outside the bounds of what they expected. Because the steps between intake and response are hidden, the meaning and significance the other person assigns can produce a response that surprises the recipient, and that surprise can be a catalyst for conflict.

I like J. B. Rainsberger's example of applying the Satir Interaction Model to a conversation in which someone is wished "Happy Holidays". Responses to this intake may vary wildly, depending on the meaning and significance that people assign to the phrase [3]:

- "How dare this person insult Christmas and deny the Christ...!"

- "If only you'd bother to learn to pronounce 'Chanukah'..."

- "Have you ever even heard of Candlemas...?!"

On applying the model to resolve misunderstandings, Dale H. Emery says:

I focus first on my own process, because the errors I can most easily correct are the ones that I make. When I see and hear clearly, interpret accurately, assign the right significance, and accept my feelings, I understand other people's messages better, and my responses are more effective and appropriate. And when I understand well, I am better able to notice when other people misinterpret my messages, and to correct the misunderstanding. [2]

Finally, Judy Bamberger offers a very useful companion resource for adopting the Satir Interaction Model in practice [5]. She provides ideas about what could go wrong at each step in the model and offers practical suggestions for how to recover from errors, problems, or misunderstandings.

Association with Myers-Briggs

Weinberg drew a link between the Myers-Briggs leadership styles and the Satir Interaction Model that may be useful when adapting your communication style to different types of people. He suggests that:

The NT visionaries and NF Catalysts, both being Intuitive, skip quickly over the Intake step. … NTs tend to go instantly to Meaning, while the NFs tend to jump immediately to Significance. … The SJ Organizers stay in Intake mode too long … The SP Troubleshooters actually use the whole process rather well … (pp. 108 & 109) [6]

Weinberg then offers the following questions to prompt each type of person to apply each step of the Satir Interaction Model:

For NTs/NFs ask, “What did you see or hear that led you to that conclusion?”

For SJs ask, “What can we conclude from the data we have so far?”

For SPs appeal to their desire to be clever and ask them to teach you how they did it. [7]

Distinguish reaction from response

Willem van den Ende draws the Satir Interaction Model so that both sides of the interaction are shown:

Figure: "Debugging" sessions, Willem van den Ende [8]

Using this illustration, he specifically differentiates between a reaction and a response. A reaction happens when a person skips the meaning and significance steps and jumps straight from intake to response. When both people in an interaction become reactive instead of responsive, a fight is the likely result [8]. Recognising that these skipped steps may be the cause of a misunderstanding could help resolve the situation.

Unacceptable Behaviour

The Satir Interaction Model may also be useful for structuring conversations that address unacceptable behaviour. Esther Derby suggests that these conversations should begin by getting agreement that the behaviour happened, continue with a discussion of its impact, and conclude by allowing the recipient of the message to realise that their behaviour is counter-productive [9].

References

[1] "I think we have an issue" - Delivering unwelcome messages, Fiona Charles

[2] Untangling Communication, Dale H. Emery

[3] Don't Let Miscommunication Spiral Out Of Control, J. B. Rainsberger

[4] Satir Interaction Model, Steven M. Smith

[5] The Satir Interaction Model, Judy Bamberger

[6] Debugging System Boundaries: The Satir Interaction Model, Donald E. Gray

[7] Why Not Ask Why? Get Help From the Satir Interaction Model, Donald E. Gray

[8] "Debugging" sessions, Willem van den Ende

[9] intake->meaning->feeling->response, Esther Derby