That conversation lead to this poll on Twitter:

Do you use 'end-to-end' test to mean:— Katrina Clokie (@katrina_tester) July 11, 2017

1) test of full infrastructure stack, or

2) test of entire customer workflow?

The poll results show that roughly a quarter of respondents considered end-to-end testing to be primarily about the infrastructure stack, while the remaining majority considered it from the perspective of their customer workflow. Odds are that this ratio means I'm not the only person who has experienced a confusing conversation about end-to-end tests.

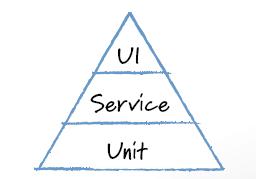

I started to think about the complexity that is hidden at the top of the testing pyramid. The model states that the smallest number of tests to automate are those at the peak, labelled as end-to-end (E2E) or user interface (UI) tests.

|

| Testing Pyramid by Mike Cohn |

These labels are used in the test pyramid interchangeably, but end-to-end and user interface testing are not synonymous. I can think of seven different types of automation that might be labelled by one or either of those two terms:

|

| Seven example of user interface and/or end-to-end testing |

The table above might be confusing without examples, so here are a few from my own experience.

1. User interface; Not full infrastructure stack; Not entire workflow

In my current organisation we have a single page JavaScript application that allows the user to perform complex interactions through modal overlays. We run targeted tests against this application, through the browser, using static responses in place of our real middleware and mainframe systems.

This suite is not end-to-end in any sense. We focus on the front-end of our infrastructure and test pieces of our customer workflows. We could call this suite user interface testing.

2. User interface; Full infrastructure stack; Not entire workflow

I previously worked with an application that was consuming data feeds from a third party provider. We were writing a user interface that displayed the results of calculations that relied on these feeds as input. We had a suite of tests that ran through the user interface to test each result in isolation.

Multiple calculations made up a workflow, so the tests did not cover an entire customer journey. However they did rely on the third-party feed being available to return test data to our application, so they were end-to-end from an infrastructure perspective. In this team we used the terms user interface tests and end-to-end tests interchangeably when talking about this suite.

3. User interface; Not full infrastructure stack; Entire workflow

In my current organisation we have an online banking application written largely in Java. Different steps of a workflow, such as making a payment, each display separately on a dedicated page. We have a suite of tests that run through the common workflows to test that everything is connected correctly.

This suite executes tests through the browser against the deployed web application, but uses mocked responses instead of calling the back-end systems. It is a suite that targets workflows. We could call this a user interface or end-to-end suite.

4. User interface; Full infrastructure stack; Entire workflow

In the same product as the first example, there is another suite of automation that runs through the user interface against the full infrastructure stack to test the entire customer workflow. We interact with the application using test data that is provisioned across all the dependent systems and the tests perform all the steps in a customer journey. We could call this suite user interface or end-to-end testing.

5. No user interface; Full infrastructure stack; Not entire workflow

In my current organisation we have a team working on development of open APIs. There is no user interface for the API as a product. The team have a test suite that interacts with their APIs and relies on the supporting systems to be active: databases, middleware, mainframe, and dependencies to other APIs.

These tests are end-to-end from an infrastructure perspective, but their test scope is narrow. They interrogate successful and failing responses for individual requests, rather than looking at the sequence of activities that would be performed by a customer.

6. No user interface; Not full infrastructure stack; Entire workflow

Earlier in my career I worked in telecommunications. I used to install, configure, and test the call control and charging software within cellphone networks. We had an in-house test tool that we could use to trigger scripted traffic through our software. This meant that we could bypass the radio network and use scripts to test that a call would be processed correctly, from when a person dialed the number to when they ended the call, without needing to use a mobile device.

These automated tests were end-to-end tests from a workflow perspective, even though there was no user interface and we weren't using the entire network infrastructure.

7. No user interface, Full infrastructure stack; Entire workflow

The API team in the fifth example have a second automated suite where a single test performs multiple API requests in sequence, passing data between calls to emulate a customer workflow. These tests are end-to-end from both the infrastructure and the customer experience perspective.

As these examples illustrate, end-to-end and user interface testing can mean different things depending on the product under test and the test strategy adopted by a team. If you work in an organisation where you label your test automation with one of these terms, it may be worth checking that there is truly a shared understanding of what your tests are doing. Different perspectives of test coverage can create opportunities for bugs to be missed.